Regardless of when or where they happen, invisible gas leaks are difficult and time-consuming to detect, especially with outdated inspection methods and when there are many components to inspect.

As discussed in detail in our earlier blog, there has been a major shift in how the U.S. government regulates methane and other greenhouse gas emissions that contribute to global warming. Specifically, we covered the EPA’s New Source Performance Standards (NSPS) “OOOOb” and Emissions Guidelines (EG) “OOOOc.” These updates build on the 2015 regulations known as “OOOOa,” which first established optical gas imaging (OGI) as the best system of emission reduction. These EPA regulations and guidance, intended to protect the environment, are significantly changing leak detection practices across the oil and gas (O&G) industry.

To comply with the recent regulations, affected O&G organizations need to ensure that they have the right personnel, training, and tools to complete the mission, especially handheld gas detection tools that can quickly detect and measure leaks.

With this in mind, it is fitting to introduce the new FLIR G-Series Optical Gas Imaging (OGI) cameras. The FLIR G-Series features a family of high-tech, cooled-core OGI cameras that can help leak detection and repair (LDAR) professionals seamlessly locate, quantify, and document harmful gas emissions.

With their purpose-built design and new features, FLIR G-Series cameras enable inspectors to detect leaks faster and pinpoint the source immediately, leading to prompt repairs, lower industrial emissions, and better regulatory compliance.

Importantly, the FLIR G-Series now supports on-camera quantification analytics that measure the type and size of leaks, along with onboard GPS, eliminating the need for a secondary device. This reduces time in the field and supports the documentation the regulations now require.

BUILT-IN DELTA T CHECKS

One of the first regulatory elements we reviewed was the definition of Delta T and the implications of Appendix K. Delta T is shorthand for the temperature difference between the background of the scene viewed by the camera and the emitted gas (assumed to be at ambient temperature). It is the criterion the inspector must check before trusting an image: the difference must be large enough for the camera to visualize the leaking emission effectively.

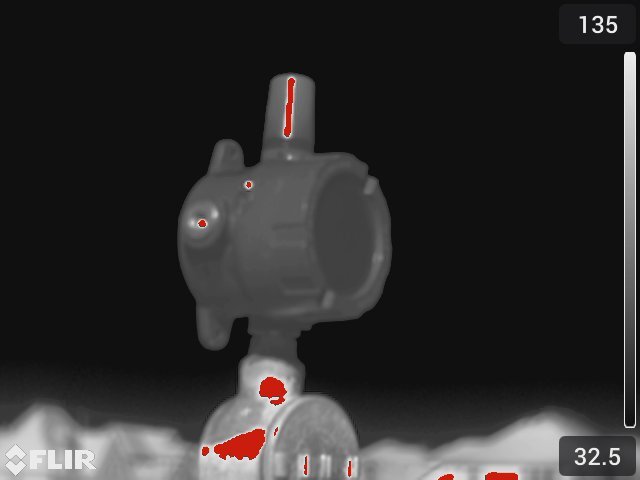

Evaluating Delta T at the pixel and component level, rather than across the whole scene, is necessary; otherwise the inspector could miss a number of leaks. FLIR has made the Delta T check a single push-button function on the camera. The camera then highlights every pixel that does not meet the required Delta T, providing a full picture rather than a bounding box. Predecessor cameras dating back to the previous decade, and even some newer cameras on the market, may apply only a single spot radiometer that measures a large area (e.g., a 10’x10’ box) to compare the background to the ambient temperature. Because this approach averages the measurement over a large area instead of evaluating each pixel, it can give an operator false confidence that the Delta T check has passed, and leaks are then missed. A pixel-level check, by contrast, provides component-level assurance that the inspection is accurate.

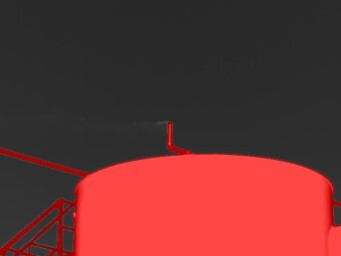

The image on the left shows a tank with large areas of insufficient Delta T. If this leak were moving downward from the vent line (rather than from right to left), an OGI camera would not detect it. The image on the right, from a FLIR Gx320 camera, shows how a true Delta T check should work: it highlights the specific pixels that lack sufficient Delta T, allowing an operator to ensure every component is properly inspected.
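To make the difference between a scene-average check and a pixel-level check concrete, here is a minimal Python sketch. It is an illustration, not FLIR's implementation: it assumes the background temperature of each pixel is already available as a grid of °C values, treats the gas as being at ambient temperature (as the Delta T definition does), and uses a hypothetical minimum Delta T chosen only for the example. The real threshold depends on the camera's detection capability and the applicable Appendix K procedure.

```python
from typing import List

Grid = List[List[float]]  # background temperature per pixel, in °C


def pixel_delta_t_mask(background: Grid, gas_temp: float, min_delta_t: float) -> List[List[bool]]:
    """Pixel-level check: True marks a pixel whose contrast with the gas is too low.

    The absolute difference is used because a background can be either
    warmer or colder than the gas.
    """
    return [[abs(bg - gas_temp) < min_delta_t for bg in row] for row in background]


def area_average_passes(background: Grid, gas_temp: float, min_delta_t: float) -> bool:
    """Single-spot style check: average the whole area, then compare once."""
    pixels = [bg for row in background for bg in row]
    mean_bg = sum(pixels) / len(pixels)
    return abs(mean_bg - gas_temp) >= min_delta_t


# Hypothetical scene: cold sky in the top row, a tank wall near ambient below it.
ambient_c = 20.0
MIN_DELTA_T_C = 2.0  # hypothetical threshold, for illustration only
scene = [
    [-10.0, -10.0],  # sky
    [20.5, 20.0],    # tank wall
]

print(area_average_passes(scene, ambient_c, MIN_DELTA_T_C))  # True
print(pixel_delta_t_mask(scene, ambient_c, MIN_DELTA_T_C))   # [[False, False], [True, True]]
```

In this example the area average passes comfortably because the cold sky pulls the mean far from ambient, yet both tank-wall pixels have almost no contrast. That is the false-confidence scenario described above: a leak in front of the tank wall could go unseen even though the averaged check reports success.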

FLIR ROUTE CREATOR

Designed for thermographers who inspect large numbers of objects, FLIR Inspection Route software guides the user along a predefined route of inspection points built in FLIR Thermal Studio Pro using the Route Creator plugin. This helps inspectors follow a logical route and collect images and data in a structured manner. It also speeds up post-processing and reporting. EPA standards now require components to be observed for a specified number of seconds, and FLIR Route Creator can help ensure that this timing is met.

The camera records GPS coordinates along the survey path, producing a detailed record for the EPA of exactly where the inspector walked, with this capability built into the camera. At predefined stops along the route, Route Creator pops up guidance that tells the operator how many components are in the inspection scene. This makes it convenient to confirm that every point and step in the inspection plan is covered, so that when an inspector has a chain of components to observe, they can ensure the inspection meets the requirements of the regulation.
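The coverage and timing check that route guidance supports can be sketched as follows. This is a hypothetical Python illustration, not Route Creator's data format or logic: the stop IDs, the log of observed seconds per stop, and the required dwell time are all assumed inputs. The actual minimum observation time should be taken from the applicable EPA requirement; the value below is a placeholder.

```python
from typing import Dict, List


def incomplete_stops(route: List[str],
                     observed_seconds: Dict[str, float],
                     required_seconds: float) -> List[str]:
    """Return, in route order, the stops that were skipped or observed too briefly.

    route            -- hypothetical stop IDs in the order they should be visited
    observed_seconds -- hypothetical log of how long each stop was viewed
    required_seconds -- placeholder minimum dwell time per stop
    """
    flagged = []
    for stop_id in route:
        # A stop missing from the log is treated as skipped (0 seconds observed).
        seconds = observed_seconds.get(stop_id, 0.0)
        if seconds < required_seconds:
            flagged.append(stop_id)
    return flagged


route = ["separator-A", "tank-vent-1", "wellhead-3"]
observed = {"separator-A": 12.0, "tank-vent-1": 4.5}
REQUIRED_SECONDS = 10.0  # placeholder, not a regulatory value

print(incomplete_stops(route, observed, REQUIRED_SECONDS))  # ['tank-vent-1', 'wellhead-3']
```

Returning the flagged stops in route order keeps the result easy to act on: the inspector can walk back to the first gap rather than scanning an unordered list.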

PHOTOGRAPHIC EVIDENCE

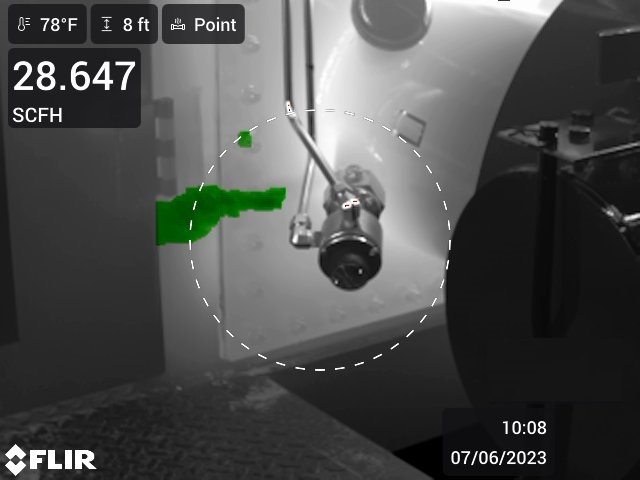

Regulations also now require a short video clip or a photograph depicting the leak and the component associated with it. Historically, it was difficult to show where a leak was coming from without using video. With FLIR’s new Sketch on IR feature, the inspector simply takes an IR image and draws an arrow on it, on top of an overlay with GPS data, date, and time, to satisfy the requirement. The G-Series cameras also have built-in GPS logging to show the exact monitoring survey path taken with the camera.

The image on the left shows that with FLIR’s new Gx320 camera, an operator can take an IR image instead of a video and use the touchscreen LCD to draw an arrow pointing to the leaking component. The image on the right shows what on-camera quantification looks like with FLIR QOGI.

QUANTIFICATION

Leak quantification helps provide the more accurate emissions metrics required in parts of the revisions to Subpart W, which are still in draft form. With FLIR, inspectors can see what is happening and gain situational awareness of the emission, down to exactly where a repair technician needs to go to fix it.

While the new regulations do not specify QOGI as the quantification tool, operators have an opportunity to use it as a baseline for some of the regulations, in particular to get ahead of the new Waste Emissions Charge (WEC) established under the Inflation Reduction Act (IRA). The WEC for methane applies to natural gas and petroleum facilities that emit more than 25,000 metric tons of CO2 equivalent (CO2e) per year, as reported under Subpart W of the Greenhouse Gas Reporting Program.
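As a rough illustration of that applicability threshold, the sketch below converts a facility's reported emissions to CO2e and compares the total with 25,000 metric tons. It is a simplification built on assumptions: the list of gases and the global warming potential (GWP) values are inputs the user must take from the current Subpart W rules, and the methane GWP of 25 in the example is illustrative only. The sketch checks only the emissions threshold described above, not the charge owed or any other eligibility condition.

```python
from typing import Dict

WEC_THRESHOLD_T_CO2E = 25_000.0  # metric tons CO2e per year, per the text above


def total_co2e(emissions_t: Dict[str, float], gwp: Dict[str, float]) -> float:
    """Convert reported emissions (metric tons per gas) to metric tons of CO2e."""
    return sum(tonnes * gwp[gas] for gas, tonnes in emissions_t.items())


def wec_threshold_exceeded(emissions_t: Dict[str, float], gwp: Dict[str, float]) -> bool:
    """True when reported CO2e is *more than* the 25,000 t threshold."""
    return total_co2e(emissions_t, gwp) > WEC_THRESHOLD_T_CO2E


# Hypothetical facility; GWP values are illustrative, check the current rule.
reported = {"CO2": 3_000.0, "CH4": 900.0}
gwp_values = {"CO2": 1.0, "CH4": 25.0}

print(total_co2e(reported, gwp_values))              # 25500.0
print(wec_threshold_exceeded(reported, gwp_values))  # True
```

Keeping the GWP table as a parameter rather than hard-coding it means the same check still works if the reporting program updates its GWP values.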

CONCLUSION

O&G professionals would do well to immerse themselves in the latest EPA regulations and guidance, as FLIR has detailed in its recent blogs. They also need to know what tools are available, because inspection activities must now be recorded with a higher level of detail and accuracy. Furthermore, documenting the testing process and results, down to details like GPS coordinates for specific sets of components, has become essential to the inspection lifecycle. Conversely, relying on outdated tools and cameras that lack the controls, software, and reliable wireless connectivity needed for proper reporting will likely cause severe productivity losses, and it may also leave the organization unaware of gas leaks, preventing it from meeting the latest standards.

Source: FLIR.IN